Snowflake

Step-by-step guide on setting up Snowflake as a destination in RudderStack.

Snowflake is a cloud-based data warehouse provided as Software-as-a-Service (SaaS). It offers all the modern data warehouse features like scalability, ease of use, secure access to your data, advanced analytics capabilities, and more.

RudderStack lets you configure Snowflake as a destination to seamlessly store your event data.

Setting user permissions in Snowflake

To enable RudderStack access, make sure you have an ACCOUNTADMIN, or an account that has the MANAGE GRANTS privilege.

The following sections illustrate how to set up a virtual warehouse, a database, a role, and an user in Snowflake:

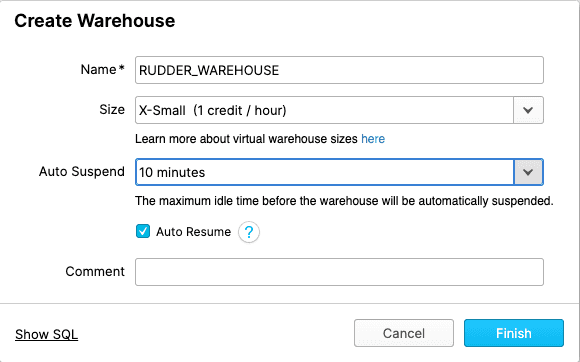

Creating a virtual warehouse

In your Snowflake console, create a X-Small warehouse:

Alternatively, you can create a new warehouse by running the following SQL commands:

CREATE WAREHOUSE "RUDDER_WAREHOUSE" WITH WAREHOUSE_SIZE = 'XSMALL' WAREHOUSE_TYPE = 'STANDARD' AUTO_SUSPEND = 600 AUTO_RESUME = TRUE;AUTO_SUSPEND to ~10 mins and enable AUTO_RESUME to avoid any extra costs.Creating a database

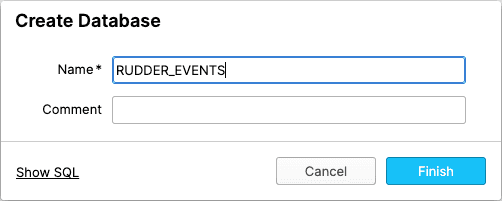

Create a new database to avoid conflicts with your existing data, as RudderStack creates its own tables while storing your events.

The following image demonstrates the Create Database option in Snowflake.

Alternatively, you can create a new database by running the following SQL command:

CREATE DATABASE "RUDDER_EVENTS";Creating a role for RudderStack

Run the following SQL commands to create a new role with the required permissions to load your data into the newly-created warehouse:

- Create a new role called

RUDDER:

CREATE ROLE "RUDDER";- Grant access to the warehouse

RUDDER_WAREHOUSE:

GRANT USAGE ON WAREHOUSE "RUDDER_WAREHOUSE" TO ROLE "RUDDER";- Grant access to the database

RUDDER_EVENTS:

GRANT USAGE ON DATABASE "RUDDER_EVENTS" TO ROLE "RUDDER";GRANT CREATE SCHEMA ON DATABASE "RUDDER_EVENTS" TO ROLE "RUDDER";GRANT ALL ON ALL SCHEMAS IN DATABASE "RUDDER_EVENTS" TO ROLE "RUDDER";Creating a user

Finally, create a user to connect RudderStack to the newly-created Snowflake warehouse using the following SQL query:

CREATE USER "RUDDER_USER" MUST_CHANGE_PASSWORD = FALSE DEFAULT_ROLE = "RUDDER" PASSWORD = "<your_password>";GRANT ROLE "RUDDER" TO USER "RUDDER_USER";Configuring Snowflake destination in RudderStack

To start sending data to Snowflake, you will first need to add it as a destination in RudderStack and connect it to a data source. Once the destination is enabled, the events will automatically start flowing to your Snowflake instance.

Follow these steps to configure your Azure data lake as a destination in RudderStack:

- From your RudderStack dashboard, configure the data source. Then, select Snowflake from the list of destinations.

- Assign a name to your destination and click on Next.

Connection settings

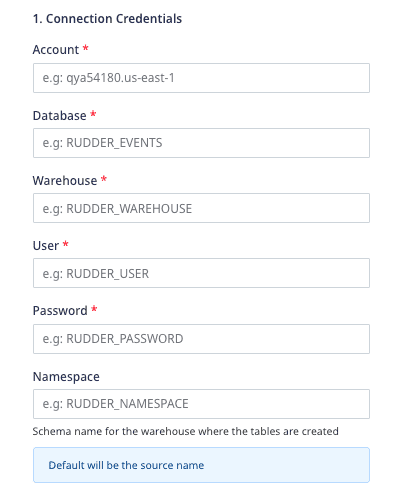

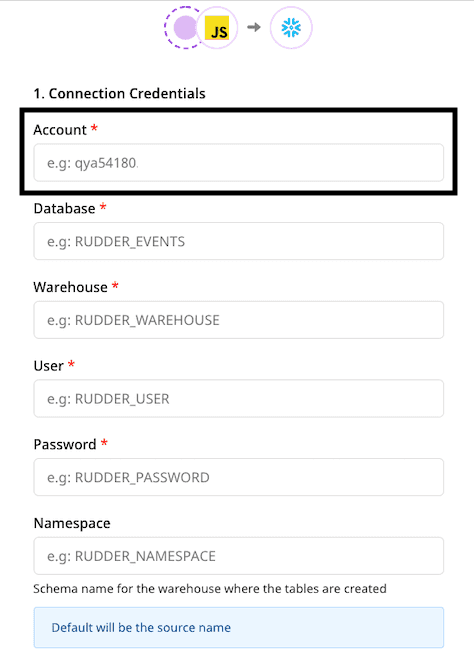

Enter the following credentials in the Connection Credentials page:

- Account: Enter the account ID of your Snowflake warehouse. This account ID is part of the Snowflake URL.

The following examples illustrate the slight differences in the account ID for various cloud providers:

| Account ID example | Corresponding Snowflake URL | Snowflake cloud provider |

|---|---|---|

qya56091.us-east-1qya56091.us-east-2.aws | https://qya56091.us-east-1.snowflakecomputing.com https://qya56091.us-east-2.aws.snowflakecomputing.com | AWS |

rx18795.east-us-2.azure | https://rx18795.east-us-2.azure.snowflakecomputing.com | Microsoft Azure |

ah76025.us-central1.gcp | https://ah76025.us-central1.gcp.snowflakecomputing.com | Google Cloud Platform |

.aws is present in the account locator of some region accounts and hence must be included in the Account field above. For more information on the different account locator formats depending on your region or cloud provider, refer to the Snowflake documentation.

- Database: Enter the name of the database created in the Creating a database section above.

- Warehouse: Enter the name of the warehouse created in the Creating a virtual warehouse section above.

- User: Enter the name of the user created in the Creating a user section above.

- Password: Enter the password you set for the above user.

- Namespace: Enter the schema name for the warehouse where RudderStack will create all the tables.

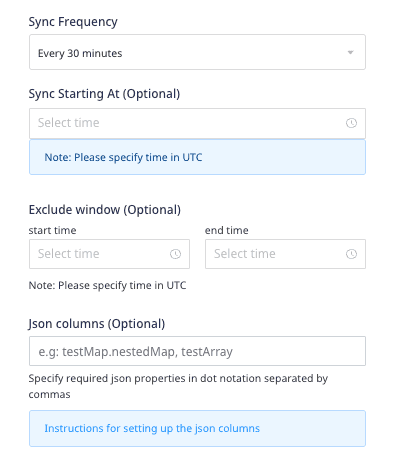

- Sync Frequency: Specify how often RudderStack should sync the data to your Snowflake warehouse.

- Sync Starting At: This optional setting lets you specify the particular time of the day (in UTC) when you want RudderStack to sync the data to the warehouse.

- Exclude Window: This optional setting lets you set a time window when RudderStack will not sync the data to the warehouse.

- JSON Columns: Use this optional setting to specify the required JSON column paths in dot notation, separated by commas. This option applies to all the incoming

trackevents for this destination.

Configuring the object storage

RudderStack lets you configure the following object storage configuration settings while setting up your Snowflake destination:

- Use RudderStack-managed Object Storage: Enable this setting to use RudderStack-managed buckets for object storage.

- Choose your Cloud: Select the cloud provider for your Snowflake instance.

Refer to the following settings depending on your cloud provider:

- Staging S3 Storage Bucket Name: Specify the name of your S3 bucket where RudderStack will store the data before loading it into Snowflake.

- Prefix: If specified, RudderStack will create a folder in the bucket with this prefix and push all the data within that folder.

- Storage Integration: Refer to the Configuring cloud storage integration with Snowflake section for details.

- AWS Access Key ID: Enter your AWS access key ID obtained from the AWS console.

- AWS Secret Access Key: Enter the AWS secret access key. Refer to this Permissions section for more information.

- Enable Server-side Encryption for S3: Toggle on this setting to enable server-side encryption for your S3 bucket.

- Staging Azure Blob Storage Container Name: Specify the name of your Azure container where RudderStack will store the data before loading it into Snowflake.

- Prefix: If specified, RudderStack will create a folder in the bucket with this prefix and push all the data within that folder.

- Storage Integration: Refer to the Configuring cloud storage integration with Snowflake section for details.

- Azure Blob Storage Account Name: Enter the account name for the Azure container.

- Azure Blob Storage Account Key: Enter the account key for your Azure container. Refer to the Blob Storage settings section for more information.

- Staging GCS Object Storage Bucket Name: Specify the name of your GCS bucket where RudderStack will store the data before loading it into Snowflake.

- Prefix: If specified, RudderStack will create a folder in the bucket with this prefix and push all the data within that folder.

- Storage Integration: Refer to the Configuring cloud storage integration with Snowflake section for details.

- Credentials: Paste the contents of your GCP service account credentials JSON. The service account should have a role with

storage.objectCreatoraccess.

Configuring cloud storage integration with Snowflake

This section lists the steps required to configure the Storage Integration setting specified in the Configuring the object storage section above, depending on your cloud provider.

AWS

If you have Amazon Web Services (AWS) as your cloud provider and want to use S3 as your object storage, you will need to follow a few more steps to configure your cloud storage integration with Snowflake. You can find the detailed instructions in this Snowflake documentation.

- Create a policy in AWS: In the following JSON, replace

<bucket_name>and<prefix>with the name of your S3 bucket and the prefix set in the Configuring the object storage section above, and create the policy with a name of your choice.

{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Action": [ "s3:PutObject", "s3:GetObject", "s3:GetObjectVersion", "s3:DeleteObject", "s3:DeleteObjectVersion" ], "Resource": "arn:aws:s3:::<bucket_name>/<prefix>/*" }, { "Effect": "Allow", "Action": "s3:ListBucket", "Resource": "arn:aws:s3:::<bucket_name>", "Condition": { "StringLike": { "s3:prefix": ["<prefix>/*"] } } } ]}Create a role and attach the above policy in AWS: Follow the steps listed below:

- Create a role of type Another AWS account.

- Enter your AWS account ID and enable the Require External ID option.

- For external ID, you can add a dummy value like 0000. This can be modified later.

- Attach the policy created in Step 1. Assign a name to this role and keep the role ARN handy for the next step.

- Create the cloud storage integration in Snowflake: Replace

<integration_name>with the name of your choice and<iam_role>with the role ARN obtained in Step 2 and run the following command:

CREATE STORAGE INTEGRATION <integration_name> TYPE = EXTERNAL_STAGE STORAGE_PROVIDER = S3 ENABLED = TRUE STORAGE_AWS_ROLE_ARN = '<iam_role>' STORAGE_ALLOWED_LOCATIONS = ('s3://<bucket_name>/<path>/', 's3://<bucket_name>/<path>/') [ STORAGE_BLOCKED_LOCATIONS = ('s3://<bucket_name>/<path>/', 's3://<bucket_name>/<path>/') ]<integration_name>.- Retrieve the AWS IAM user for your Snowflake account as shown:

DESC INTEGRATION <integration_name>;- Grant the IAM user permissions to access the bucket objects in AWS. Choose the role you created in Step 2 and edit the trust relationship as shown in the following JSON:

{ "Version": "2012-10-17", "Statement": [ { "Sid": "", "Effect": "Allow", "Principal": { "AWS": "<snowflake_user_arn>" }, "Action": "sts:AssumeRole", "Condition": { "StringEquals": { "sts:ExternalId": "<snowflake_external_id>" } } } ]}Note that <snowflake_user_arn> is the STORAGE_AWS_ROLE_ARN option seen in Step 4, whereas <snowflake_external_id> is the STORAGE_AWS_EXTERNAL_ID.

- Grant integration access to the Snowflake role you created in the Creating a role for RudderStack section by running the following command:

GRANT usage ON integration <integration_name> TO ROLE "RUDDER";Here, <integration_name> is the name of the integration created in the Step 3.

Note that while sending the data to Snowflake, RudderStack uses an external location instead of a stage in its queries. Hence, the following command listed in the Snowflake documentation is not required:

GRANT CREATE STAGE ON SCHEMA PUBLIC public to role myrole;Azure

If you have Microsoft Azure as your cloud provider and want to leverage Azure Blob Storage as your object storage, you will need to follow a few more steps to configure your storage integration with Snowflake. You can find the detailed instructions in this Snowflake documentation.

- Create a storage account and container in Azure: First, create a storage account in Azure. Then, navigate to Storage Explorer > Blob Containers > Create a Blob Container.

- Run the following commands to create a cloud storage integration in Snowflake:

CREATE STORAGE INTEGRATION <integration_name>TYPE = EXTERNAL_STAGESTORAGE_PROVIDER = AZUREENABLED = TRUEAZURE_TENANT_ID = '<tenant_id>'STORAGE_ALLOWED_LOCATIONS = ('azure://<account>.blob.core.windows.net/<container>/<path>/', 'azure://<account>.blob.core.windows.net/<container>/<path>/')[ STORAGE_BLOCKED_LOCATIONS = ('azure://<account>.blob.core.windows.net/<container>/<path>/', 'azure://<account>.blob.core.windows.net/<container>/<path>/') ]You can get your <tenant_id> by navigating to Azure Active Directory > Properties > Directory ID.

- Grant Snowflake access to the storage locations: Run the following command and replace

<integration_name>with the integration name created in Step 2.

DESC INTEGRATION <integration_name>;AZURE_CONSENT_URL and AZURE_MULTI_TENANT_APP_NAME.- Go to the URL obtained in

AZURE_CONSENT_URLand accept the consent requirements. - Grant Snowflake access to the container: Navigate to Azure Services > Storage Accounts and select the storage account created in Step 1.

- Add the role: Navigate to Access Control (IAM) > Add Role Assignment. Select either Storage Blob Data Reader with Read access, or Storage Blob Data Contributor with Read and Write access.

- Add Assign Access: Add Service Principal as the security principal type for the role. Search for

AZURE_MULTI_TENANT_APP_NAMEthat you obtained in Step 3. - Grant integration access to the Snowflake role you created in the Creating a role for RudderStack section by running the following command:

GRANT USAGE ON integration <integration_name> to role "RUDDER";Here, <integration_name> is the integration you created in Step 2.

GCP

If you have Google Cloud Platform as your cloud provider and want to leverage Google Cloud Storage as your object storage, you will need to follow a few more steps to configure your storage integration with Snowflake. You can find the detailed instructions in this Snowflake documentation.

- Create a Cloud Storage integration in Snowflake: Run the following command:

CREATE STORAGE INTEGRATION <integration_name> TYPE = EXTERNAL_STAGE STORAGE_PROVIDER = GCS ENABLED = TRUE STORAGE_ALLOWED_LOCATIONS = ('gcs://<bucket>/<path>/', 'gcs://<bucket>/<path>/')Replace <integration_name> with the name of your Cloud Storage integration, <bucket> with Staging GCS Object Storage Bucket Name, and <path> with the prefix set in the Configuring the object storage section above.

- The following command retrieves the Cloud Storage service account ID created for your Snowflake account, where

<integration_name>is the integration name you specified in Step 1.

DESC STORAGE INTEGRATION <integration_name>;STORAGE_GCP_SERVICE_ACCOUNT. Retrieve this property value. It should be of the format service-account-id@UNIQUE_STRING.iam.gserviceaccount.com.Grant service account permissions to access the bucket objects: Create a custom IAM role with the required permissions to access the bucket and fetch the objects by following these steps:

- Log into the GCP console as a Project Editor.

- From the dashboard, go to IAM & Admin > Roles.

- Click on CREATE ROLE.

- Enter the title and description for the custom role.

- Click on ADD PERMISSIONS.

- Filter the following permissions in the Enter property name or value and add them to the list. Then, click on ADD.

| Permission name |

|---|

storage.buckets.get |

storage.objects.get |

storage.objects.list |

storage.objects.create |

Assign the custom role to the Cloud Storage service account:

- In your GCP console dashboard, go to Cloud Storage > Browser.

- Select the bucket to configure the access.

- Select SHOW INFO PANEL in the upper right corner. The information panel for the bucket will pop out.

- In the Add Members section, get the service account name from the

DESCcommand run in Step 2. - From the Select a role dropdown, select Storage > Custom >

<role>, where<role>is the custom Cloud Storage role. - Click on the ADD button. The service account name will be added to the Storage Object Viewer role dropdown in the information panel.

Grant integration access to the Snowflake role you created in the Creating a role for RudderStack section by running the following command:

GRANT USAGE ON INTEGRATION <integration_name> TO ROLE "RUDDER";Here, <integration_name> is the integration name you set up in Step 1.

IPs to be allowlisted

To enable network access to RudderStack, you will need to allowlist the following RudderStack IPs:

- 3.216.35.97

- 34.198.90.241

- 54.147.40.62

- 23.20.96.9

- 18.214.35.254

- 35.83.226.133

- 52.41.61.208

- 44.227.140.138

- 54.245.141.180

- 3.66.99.198

- 3.64.201.167

- 3.66.99.198

- 3.64.201.167

FAQ

While configuring the Snowflake destination, what should I enter in the Account field?

While configuring Snowflake as a destination in RudderStack, you need to enter your Snowflake connection credentials which include the Account field, as shown below:

The Account field corresponds to the account ID of your Snowflake warehouse and is a part of the Snowflake URL.

The following examples illustrate the slight differences in the Snowflake account ID for various cloud providers:

| Account ID example | Corresponding Snowflake URL | Snowflake cloud provider |

|---|---|---|

qya56091.us-east-1qya56091.us-east-2.aws | https://qya56091.us-east-1.snowflakecomputing.com https://qya56091.us-east-2.aws.snowflakecomputing.com | AWS |

rx18795.east-us-2.azure | https://rx18795.east-us-2.azure.snowflakecomputing.com | Microsoft Azure |

ah76025.us-central1.gcp | https://ah76025.us-central1.gcp.snowflakecomputing.com | Google Cloud Platform |

.aws is present in the account locator of some region's accounts and must be included in the Account field above.For more information on the different account locator formats depending on your region or cloud provider, refer to the Snowflake documentation.

Contact us

For queries on any of the sections covered in this guide, you can contact us or start a conversation in our Slack community.